Using light to move data over shorter distances is becoming more common, both because there is much more data to move around and because photons are faster and cooler than electrons.

Using optical fiber for mission-critical communication is already well established. It has been the preferred PHY for long-haul communications for decades because it doesn’t suffer from the attenuation losses of copper. It also has emerged as the dominant way of transferring data back and forth between servers and storage because it is nearly impervious to interruptions, extremely fast, and requires less energy than copper. Today, all the major foundries are producing photonics ICs (PICs), and those devices are now getting a serious push as a way of reducing the cost of lidar.

PICs perform the processing to bring optical connections together, from spectrum splitting to optical-electrical conversion. They enable higher bandwidth with low signal loss, along with a cooler operating environment. Designers select the wavelengths for PICs depending on the intended applications, which can be achieved by choosing from a range of materials, including Si/SiO2, silicon nitride, and indium phosphide.

“Because of datacom pushing the foundries, photonic dies are becoming cheaper and more readily available,” said Gilles Lamant, distinguished engineer at Cadence. “If the big fabs start having lines dedicated to photonics, they’re going to want to keep them filled, and that’s going to push the price of a photonic die down, and it will tremendously help other markets.”

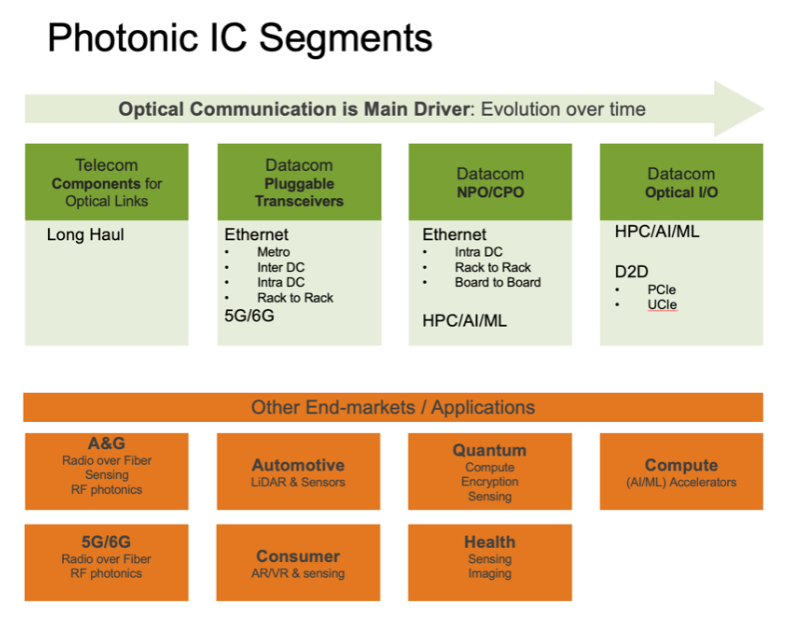

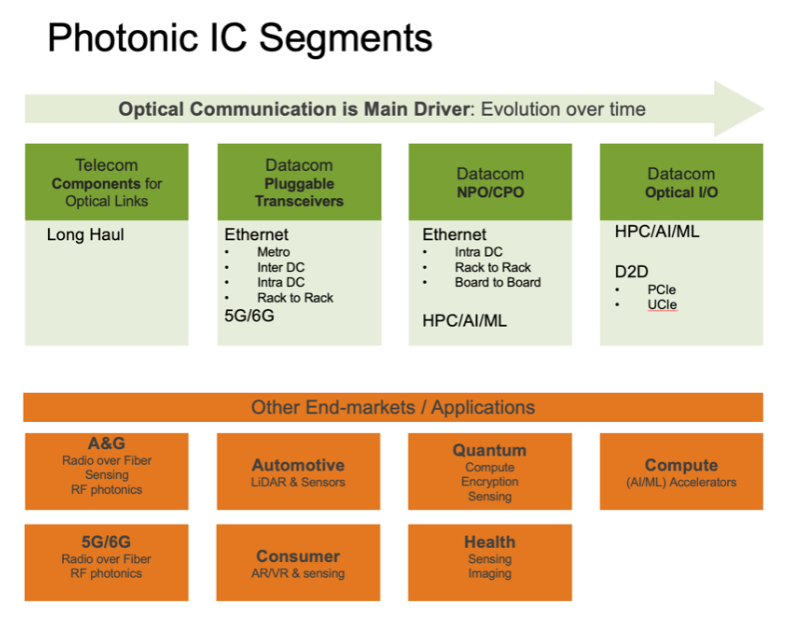

Fig. 1: Segments that use photonics ICs. Source: Synopsys

New photonics applications include everything from encoding and networking in quantum computers to sensing devices that appear everywhere from factory inspections to medical devices and ADAS.

“Most people know these photonic integrated circuits being used in transceivers for data centers, where you translate the electrical, high-speed signal into an optical signal,” said Twan Korthorst, executive director of photonic solutions for Synopsys. “Once you’re in the optical domain, you can go almost for free over very long distances. That’s what everybody is excited about — especially now with the surge of AI, as everyone is investing in even bigger data centers, connecting more compute power and memory to train and infer AI models. For that, people are accelerating their activities with optical transceivers using integrated photonics chips. That’s the starting point, but if you can build a chip that allows light to be transported, processed, and manipulated, you also can use it other places than just optical transceivers.”

Using photonics to decrease the cost of lidar

One commonly cited use case for photonics is lidar (light detection and ranging), which underlies most advanced ADAS sensing, but it also has applications in factory-floor management, drone detection for counter-intelligence, and seabed mapping (a.k.a. bathymetry). It is as integral to NOAA’s data collection as it is to studying repetitive motions on assembly lines.

Radar and lidar both take advantage of the ability of EM waves to reflect off surfaces, allowing for the detection and detailing of objects and topological features. While the physics to realize such systems is complicated, the principle is easy to grasp.

“Essentially, lidar is a ranging device, which measures the distance to a target,” according to Synopsys’ website. “The distance is measured by sending a short laser pulse and recording the time lapse between outgoing light pulse and the detection of the reflected (back-scattered) light pulse.”
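The ranging principle in that description reduces to one line of arithmetic: the pulse covers the distance twice, so the range is half the round-trip time multiplied by the speed of light. The following is a minimal sketch of that relationship, not any vendor's implementation. The function name and the 1-microsecond echo are illustrative assumptions.

```python
# Speed of light in vacuum, in meters per second.
C = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the target from a pulse's round-trip time."""
    # The pulse travels out to the target and back, so halve the total path.
    return C * round_trip_s / 2.0


# Hypothetical echo that arrives 1 microsecond after the pulse left.
print(f"{tof_distance_m(1e-6):.1f} m")
```

A 1-microsecond round trip works out to roughly 150 meters, which is why timing errors of even a nanosecond translate into range errors of about 15 centimeters.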

The result is a point cloud that looks like a topographic-style 3D image, with the wavelength of the light and the number of laser pulses per second determining the fineness of detail. Lidar uses frequencies between 194THz and 750THz, a range covering visible light (400nm to 700nm) and near-infrared (NIR, 800nm to 1,550nm), with some systems extending into the mid-infrared (Mid-IR, above 2,000nm). The choice of wavelength depends on the application, desired range, resolution, and environmental conditions. Because longer wavelengths offer less scatter, conventional wisdom was that 1,550nm is better for penetrating fog. Yet one study concluded there is less than a 10% difference “between the extinction coefficients at 905nm and 1,550nm for the same emitted power in fog.”
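Because the figures above mix terahertz and nanometers, it can help to convert between the two using frequency = speed of light / wavelength. The short sketch below does that for the wavelengths mentioned in the text. The function name is an illustrative assumption.

```python
# Speed of light in vacuum, in meters per second.
C = 299_792_458.0


def wavelength_nm_to_thz(wavelength_nm: float) -> float:
    """Convert a vacuum wavelength in nanometers to a frequency in terahertz."""
    wavelength_m = wavelength_nm * 1e-9
    return C / wavelength_m / 1e12


# Wavelengths quoted in the article: visible edge, two common NIR lines, Mid-IR edge.
for nm in (400, 905, 1550, 2000):
    print(f"{nm} nm -> {wavelength_nm_to_thz(nm):.1f} THz")
```

On this conversion, 1,550nm sits near 193THz and 400nm near 750THz, while 2,000nm falls at about 150THz.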

One could argue, however, that when measured in terms of brake initiation, even that small distinction is critical. “There are several different lidar implementations using photonics,” said Tom Daspit, manager, product marketing at Siemens EDA. “One that we see a lot in the Bay Area is the spinning disk on top of cars. It’s a laser that spins, and there’s a receiver that spins with it. There are lidar devices in development that will be buried in rearview mirrors, headlights, or other places in the car. Tesla doesn’t use lidar, but it uses optical. It looks at pictures and tries to process them. Some of the other self-driving vehicles will be using lidar. It depends on how they intend to implement their goals. To get that in a car, reliability has to be increased, and costs driven way down.”

High costs have dampened enthusiasm for lidar. “The biggest controversy about lidar is that price point,” Cadence’s Lamant said. “To go on your car, it needs to be cheaper than it is. Right now, it is way too expensive. Most of the lidar companies are making good progress, but they still have a problem with the price point.”

Others agree. Elon Musk rejected lidar for Tesla vehicles, claiming it is too expensive. Instead, Tesla utilizes 2D computer vision. Lidar vendors have pushed back, claiming that 2D imaging does not capture the world comprehensively enough to be fully safe on the road.

“A camera might miss a particular object because of reflection of the sun or oncoming headlights, but lidar negates that reflectivity and can detect a person in the middle of the road,” wrote Sudip Nag, at the time corporate vice president of Software & AI Products at Xilinx. (Nag is now corporate vice president of the AI group at AMD.) Still, cameras shouldn’t be counted out. Last year, NVIDIA researchers published a paper demonstrating how a camera-based system could handle 3D perception.

Currently, the predominant hardware for lidar is a combination of compact solid state and bulkier mechanical techniques. According to Yole Research, it is being challenged by MEMS technology, with RoboSense as a leading player. MEMS can allow for much smaller devices, which eventually should replace the large spinning discs on vehicles and help drop the price point. It’s an evolution similar to backyard satellite dishes being replaced by desktop dishes. Still, there is concern that MEMS are too small for road-worthy detection. MEMS lidar vendors, such as Germany’s Blickfeld, argue that is easily solved by enlarging MEMS and fine-tuning spatial filtering.

Despite concerns, lidar is still expected by some to be the technology of the future. Yole predicts the global lidar market in automotive to grow from $538M in 2023 to $3.6B in 2029 at a 38% CAGR. The lidar market is currently dominated by Chinese companies RoboSense and Hesai, and most of the worldwide growth is predicted to continue to be propelled by Chinese OEMs, which will release 128 lidar-enabled car models this year and next.

The current range of lidar costs is estimated at $500 to $1,000, according to a recent Nature Communications paper. “This downward trend is expected to continue, potentially reaching the $100 range in the coming years,” said the authors. “Global LiDAR penetration now is in the order of 0.5% of all passenger cars sold. We anticipate a surge in this figure to exceed 10% as the selling price of LiDAR approaches $100.”

One answer to reducing fabrication cost is silicon photonics, because such chips can be made with CMOS processes. “Lidar is a natural evolution of everything that has been done so far in the data center,” said Synopsys’ Korthorst. “If you Google solid-state lidar, or silicon photonics lidar, you will hit an enormous amount of research, startup companies, successes, failures. There’s a lot of activity in using silicon photonics. You now can build a solid-state lidar, where you use the same manufacturing as you would for optical transceivers and the same design tools. On the flip side, you also need to take into account that, because you don’t see it, you need proper eye safety.”

The industry’s answer to eye safety concerns is that lidar products follow the Class 1 eye-safe (IEC 60825-1:2014) standard, with safety further ensured by lowering the power of the lasers in balance with their wavelengths (generally 1,550nm) to keep within eye-safe parameters. Nevertheless, Blickfeld said that there could be unusual circumstances where power might be amplified, however unlikely.

At the moment, photonics still plays a small role, although it is poised to grow. “Optical technology, including FMCW (frequency-modulated continuous wave), should not be used before 2028, and only in low volumes,” according to Yole. “The technology is still emerging and will have to provide a better cost vs. performance ratio than hybrid solid state.”

Indeed, much of the cost of lidar comes from the high compute requirements for data processing. FMCW vendors are trying to lower that cost through more integration, as are FPGA vendors, which are integrating DSPs.

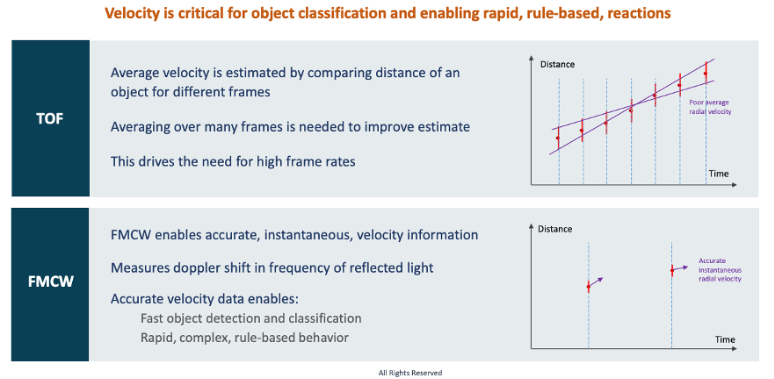

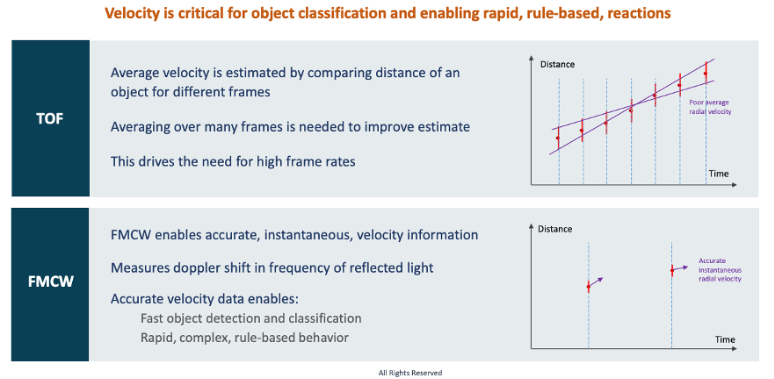

FMCW is used for both lidar and radar. While the first patent for it was issued in 1928, lately it’s being developed as the technique that may solve the cost problem for lidar. The classic technique for the industry is time-of-flight (ToF).

“Time-of-flight is basically like echo location,” said Mehdi Asghari, CEO of FMCW lidar startup SiLC. “You send the pulse of light, you see how long it takes for the reflection to come back. And from that delay, you can determine how far away the object is. 95% of the market is using time-of-flight. The radar industry also started with time-of-flight and has now completely converted to FMCW. The main difference between lidar and radar is the frequency of the electromagnetic wave. This, in turn, forces many changes in technology implementation between the two.”

Despite its prevalence, ToF leaves both radar and lidar with performance vulnerabilities, often related to precision, said Asghari. “For example, when you have a pulse system, where you measure the light that comes back, the system doesn’t discriminate between your pulse and every other pulse that is out there emitted by other users. You may be measuring some other system’s pulse thinking it’s your pulse that came back. This is referred to as multi-user interference. ToF systems are also prone to performance degradation from background light, for example from direct sunlight.”

FMCW solves this issue by sending a highly coherent beam of light at a constant amplitude, shifting only the frequency versus time in a linear fashion. “You receive the reflected light that is effectively delayed versus what you are sending out, and you then mix them together coherently,” he said. “The returning beam is one that you emitted earlier, maybe by only a few microseconds, and hence at a different frequency than the one you are currently generating. When you beat these two beams in a coherent mixer, you get a beat signal that is proportional to the frequency shift between emitted and return beams. When you detect this in a coherent receiver, you measure the beat signal, because time/frequency is proportional to distance, so you get your depth information from measuring the frequency of the light.”
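For a linear frequency sweep, the relationship Asghari describes can be written out directly: the beat frequency equals the sweep slope multiplied by the round-trip delay, and the delay gives the range as in ToF. The sketch below assumes a stationary target and an ideal linear chirp. The chirp bandwidth, chirp duration, and beat frequency are hypothetical values chosen for illustration, not figures from SiLC or any other vendor.

```python
# Speed of light in vacuum, in meters per second.
C = 299_792_458.0


def fmcw_range_m(beat_hz: float, chirp_bandwidth_hz: float,
                 chirp_duration_s: float) -> float:
    """Range to a stationary target from the beat frequency of a linear chirp."""
    # How fast the emitted frequency changes, in Hz per second.
    slope_hz_per_s = chirp_bandwidth_hz / chirp_duration_s
    # The return lags the outgoing beam by the round-trip delay,
    # so the beat frequency is slope * delay.
    round_trip_s = beat_hz / slope_hz_per_s
    # Halve the round trip to get the one-way distance.
    return C * round_trip_s / 2.0


# Hypothetical chirp: 1 GHz swept in 10 microseconds, 100 MHz measured beat.
print(f"{fmcw_range_m(100e6, 1e9, 10e-6):.1f} m")
```

With these assumed numbers, the 100MHz beat corresponds to a 1-microsecond delay, or roughly 150 meters. A receiver only has to measure a frequency in the megahertz range rather than time a pulse edge.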

In other words, FMCW measures the frequency shift of light, not the time delay of a pulse of light, as ToF does. Being able to measure small changes in the frequency of light also allows you to directly measure velocity or motion of objects.

“When you are sending the light toward a moving object, it compresses the frequency of the light that is coming back towards you. If the object is going away from you, it decompresses the frequency of the light that comes back towards you. If you’re able to measure this Doppler shift in the frequency of the light, you can make a direct measurement of both distance and velocity at the same time,” said Asghari.
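One common textbook way to separate the two effects, borrowed from FMCW radar and not necessarily what any particular lidar vendor does, is to sweep the frequency up and then down. The range term shifts the beat the same way in both halves, while the Doppler term shifts them in opposite directions. The sketch below assumes that triangular sweep, a range beat larger than the Doppler shift, and hypothetical beat frequencies at a 1,550nm wavelength.

```python
def split_range_and_doppler(beat_up_hz: float, beat_down_hz: float):
    """Separate the range beat and Doppler shift from up- and down-chirp beats."""
    # Assumed convention: an approaching target lowers the up-chirp beat
    # and raises the down-chirp beat by the same Doppler amount.
    range_beat_hz = (beat_up_hz + beat_down_hz) / 2.0
    doppler_hz = (beat_down_hz - beat_up_hz) / 2.0
    return range_beat_hz, doppler_hz


def radial_velocity_mps(doppler_hz: float, wavelength_m: float) -> float:
    """Closing speed from the Doppler shift of reflected light."""
    # Reflection doubles the shift, hence the division by two.
    return doppler_hz * wavelength_m / 2.0


# Hypothetical beats measured during the up and down halves of one sweep.
range_beat, doppler = split_range_and_doppler(87.0e6, 113.0e6)
speed = radial_velocity_mps(doppler, 1550e-9)
print(f"range beat {range_beat / 1e6:.1f} MHz, closing speed {speed:.3f} m/s")
```

A positive result here means the target is approaching. The range beat can then be converted to distance from the sweep slope, so a single sweep yields both quantities.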

That information is more difficult to obtain with a ToF system. “You have to make consecutive measurements, and then based on the change in the object distance, figure out if they’re coming toward you or going away, and by how much, and then figure out if there is velocity,” Asghari said. “If you didn’t measure a point accurately, or you have missing points or other inaccuracies, then your velocity measurement becomes problematic.”
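The consecutive-measurement approach amounts to a finite difference, which is why small range errors matter so much. The sketch below uses hypothetical numbers (a 10Hz frame rate and a 5cm range error) to show how an error in one frame carries into the velocity estimate.

```python
def velocity_from_ranges(r_prev_m: float, r_curr_m: float,
                         frame_dt_s: float) -> float:
    """Radial velocity estimated from two consecutive range measurements."""
    # Negative means the range is shrinking, i.e. the target is approaching.
    return (r_curr_m - r_prev_m) / frame_dt_s


frame_dt = 0.1  # hypothetical 10 Hz frame rate

# Clean measurements: the target closes 1 m between frames.
clean = velocity_from_ranges(50.0, 49.0, frame_dt)

# Same target, but the second range reading is off by 5 cm.
noisy = velocity_from_ranges(50.0, 49.05, frame_dt)

print(f"clean estimate: {clean:.2f} m/s")
print(f"noisy estimate: {noisy:.2f} m/s")
print(f"velocity error: {abs(noisy - clean):.2f} m/s")
```

In this example a 5cm range error becomes a 0.5 m/s velocity error, because the range error is divided by the short time between frames.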

And that’s where ToF systems can get expensive. To compensate, vendors push these systems toward very high refresh rates so they can make measurements very quickly and then average them to get an accurate velocity measurement. That creates a lot of data, which then needs to be processed. FMCW avoids the problem by producing less, but very accurate, data, which requires less computation.

A key benefit of this instantaneous, direct velocity measurement is that it enables fast and predictive system reactions. For example, it can allow a system to detect a child running after a ball in the road. Even a few milliseconds of advance warning or faster reaction can mean the difference between life and death.

Fig. 2: ToF vs. FMCW. Source: SiLC

Conclusion

Looking ahead, Cadence’s Lamant believes positive changes are coming because datacoms are forcing the foundries to ramp up volume, which will lower the price point of the die. “That opens up opportunities due to the much lower price, because currently not everybody is able to pay the price of a server. That’s going to drive an explosion of applications.”

Still, there are challenges that are too often overlooked. “EDA can play a role in solving those challenges,” Lamant said. “Photonics by itself serves no purpose. You cannot do anything that is just photonics. You need to have electronics around it, and that’s one of the biggest challenges. Most of the startups that I see failing, are failing because of an integration problem, not because they don’t have a good photonic device. They fail because they cannot build a system and mass produce it. It’s not that we’re running out of ideas for photonics. It’s that those smart people are smart in one domain, and they forget that they need the other domain. I have a barometer. When I see a startup where the CEO, the CTO, and most of the other people are photonics engineers, I have a strong suspicion they’re not going to make it. Everyone needs to remember that whether a component is part of datacom, or whether it’s a sensor, that component needs to talk to a whole system. This is where EDA can help. We have that whole ecosystem of which photonics is just one part.”
