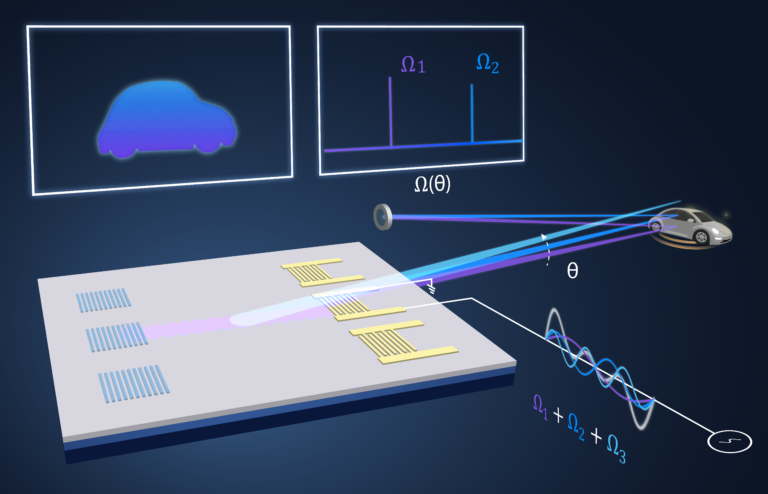

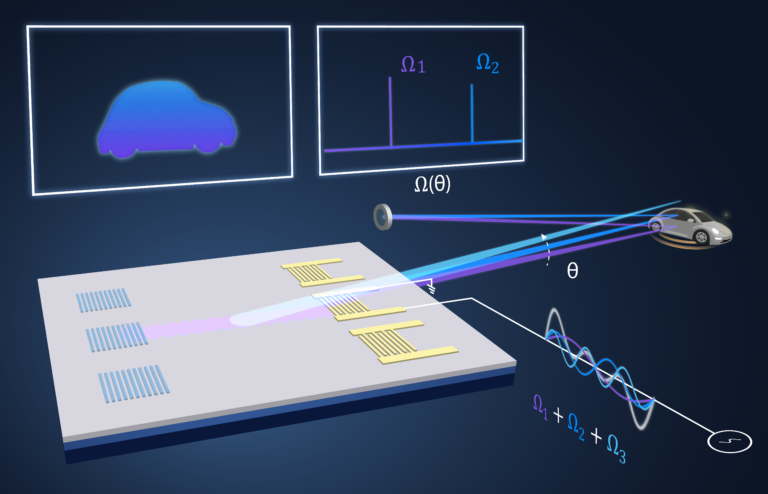

New LiDAR technology invented by University of Washington researchers integrates onto a computer chip and uses sound waves running over its surface to steer a laser beam, much like a searchlight, so self-driving cars can see objects such as pedestrians and other vehicles that are far in the distance. (Photo illustration courtesy of Bingzhao Li and Qixuan Lin)

Devices that move and operate in physical space need sensors to read their environment. One such sensing technology, LiDAR, has many potential applications, but is often too large or too expensive to be a viable choice.

Now, a team of researchers at the University of Washington has developed a much smaller and less costly form of LiDAR that has no moving parts, a breakthrough that could soon be a real game-changer for many technologies.

LiDAR, which stands for Light Detection and Ranging, is a 3D laser imaging technology that has been around for over half a century. Like radar, its radio-wave analogue, LiDAR scans across an area, then receives and interprets the reflected signal.

In the past, these laser-based systems have required moving parts, a factor that adds weight, complexity, and expense while reducing durability.

Now, in a research study recently published in the journal Nature, a UW ECE (Electrical and Computer Engineering) research team developed a way to use quantum effects to create LiDAR on a chip — a lightweight approach that needs no moving parts.

“We have invented a completely new type of laser beam-steering device without any moving parts for scanning LiDAR systems and integrated it into a computer chip,” said Mo Li, a UW ECE and physics professor who leads the research team. “This new technology uses sound running on the surface of the chip to steer a scanning laser into free space. It can detect and image objects in three dimensions from over 100 meters away.”

Li is the ECE department’s associate chair for research and senior author of the Nature paper. Most of the experimental work for this study was conducted by co-lead authors Bingzhao Li, a postdoctoral scholar, and Qixuan Lin, a UW ECE graduate student. Both are members of the UW Laboratory of Photonic Systems led by Li.

Li compares the LiDAR to a searchlight moving back and forth across an area. The enormous difference with this LiDAR on a chip is that the laser beam it emits can be bent using quantum effects.

Safe for eyes, the beam passes just barely above the surface of the chip. At the same time, an interdigital transducer (IDT) is used to excite acoustic waves on the chip. The generated vibrations “steer” the beam back and forth, according to their frequencies, with the movement occurring either continuously or in steps.

The beam subsequently reflects off objects in the environment and returns to the LiDAR, where a detector receives it. Software then interprets the information, building up an image of the objects that reflected the beam.

From left: Mo Li, Bingzhao Li, and Qixuan Lin.

Using a technique the research team calls “acousto-optic beam steering,” the chip generates high-frequency sound pulses of a few gigahertz, well beyond the audible range. These vibrations give rise to phonons, quantum quasi-particles that emerge from the collective motion of the material. The phonons alter the deflection of the photons that make up the light beam, bending it much as a prism would.

The scattering of the light mainly remains in the plane of the chip’s two-dimensional waveguide. Because of this, the light is moving through a quantum-scale medium created by the vibrations. The phonons alter the course of the beam according to their frequency, so that it sweeps across an angle resulting in about a 20-degree field of view.

Essentially, the vibrations create the equivalent of a diffraction grating, with the phonons altering the angle and wavelength of the laser’s coherent light.
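As a rough illustration of the grating picture, the spacing of the acoustic "grating" is simply the acoustic wavelength: the wave speed divided by the drive frequency. The sketch below assumes a surface acoustic wave speed of 3,500 meters per second, a placeholder that does not come from the article or the paper, and the function name is hypothetical. It only shows how the grating period shrinks as the drive frequency rises.

```python
# Illustrative only: the wave speed below is an assumed placeholder,
# not a figure reported for the UW device.
ASSUMED_SAW_SPEED_M_PER_S = 3500.0


def grating_period_m(drive_frequency_hz: float,
                     wave_speed_m_per_s: float = ASSUMED_SAW_SPEED_M_PER_S) -> float:
    """Return the acoustic wavelength (effective grating period) in meters."""
    if drive_frequency_hz <= 0:
        raise ValueError("drive frequency must be positive")
    # wavelength = speed / frequency
    return wave_speed_m_per_s / drive_frequency_hz


if __name__ == "__main__":
    for f_ghz in (1.0, 2.0, 3.0):
        period_um = grating_period_m(f_ghz * 1e9) * 1e6
        print(f"{f_ghz:.1f} GHz -> period of about {period_um:.2f} micrometers")
```

Under that assumed speed, gigahertz drive frequencies correspond to grating periods on the order of a micrometer, comparable to the wavelength of light, which is why sound at these frequencies can deflect a laser beam at all.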

This is the other crucial feature of the system: the change in wavelength corresponds to a change in frequency, the result of a well-known effect called “Brillouin scattering.” As a result, each slightly different beam angle is effectively “labeled” by photons of a different frequency.

This allows the returning photons to be detected using a single-pixel camera. This information can then be processed by software to quickly build up images, including a picture of a scanned landscape.

“We can tell the direction of the reflected laser from its ‘color,’ a method we named ‘frequency-angular resolving,’” Qixuan Lin said. “Our receiver only needs a single imaging pixel, rather than a full camera, to image objects far away. Therefore, it is much smaller and cheaper than the LiDAR receivers commonly used today.”
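To make the idea of reading direction from "color" concrete, here is a minimal sketch of how software might turn single-pixel measurements into a scan line. It is not the team's implementation. The drive band of 1.5 to 2.5 GHz, the linear relationship between frequency and angle, and both function names are assumptions made for illustration; only the roughly 20-degree field of view comes from the article.

```python
# Illustrative only: the frequency band and the linear frequency-to-angle
# map are assumptions made for this sketch, not values from the paper.
ASSUMED_F_MIN_HZ = 1.5e9   # hypothetical low end of the acoustic drive band
ASSUMED_F_MAX_HZ = 2.5e9   # hypothetical high end of the acoustic drive band
FIELD_OF_VIEW_DEG = 20.0   # approximate field of view quoted in the article


def angle_from_shift(shift_hz: float) -> float:
    """Map a measured optical frequency shift to a beam angle in degrees.

    Assumes the shift equals the acoustic drive frequency and that the
    angle varies linearly with frequency across the field of view.
    """
    if not ASSUMED_F_MIN_HZ <= shift_hz <= ASSUMED_F_MAX_HZ:
        raise ValueError("shift outside the assumed drive band")
    span_hz = ASSUMED_F_MAX_HZ - ASSUMED_F_MIN_HZ
    fraction = (shift_hz - ASSUMED_F_MIN_HZ) / span_hz
    # Center the field of view on zero degrees.
    return (fraction - 0.5) * FIELD_OF_VIEW_DEG


def build_scan_line(returns):
    """Convert (shift_hz, intensity) pairs into (angle_deg, intensity), sorted by angle."""
    return sorted((angle_from_shift(shift), intensity) for shift, intensity in returns)


if __name__ == "__main__":
    # Made-up sample returns, purely for demonstration.
    sample = [(2.5e9, 0.2), (2.0e9, 0.7), (1.5e9, 0.9)]
    for angle, intensity in build_scan_line(sample):
        print(f"{angle:+.1f} deg: intensity {intensity}")
```

The point of the sketch is that the receiver needs no spatial resolution of its own: every return already carries its direction in its frequency, so one detector plus a frequency measurement is enough to place each return at an angle.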

This system currently has a range of about 110 meters. However, traveling at normal highway speeds, an autonomous vehicle typically needs a range about triple that. The present range limit stems from the system’s current efficiency of about 5%. Li thinks they’ll need another year to reach the 50% efficiency that will be required to achieve 300 meters. That’s a range that would give a vehicle traveling above 60 mph enough time to respond to objects and situations in its path.
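The range figures are easier to judge as time. The short calculation below, a back-of-the-envelope sketch rather than a safety model, converts a detection range into the seconds a vehicle has before it reaches a stationary object. It ignores braking distance, reaction latency, and oncoming traffic, and the function name is hypothetical.

```python
MPH_TO_M_PER_S = 0.44704  # exact: 1 mile = 1609.344 m, 1 hour = 3600 s


def seconds_to_reach(range_m: float, speed_mph: float) -> float:
    """Time in seconds to cover range_m at a constant speed_mph."""
    if speed_mph <= 0:
        raise ValueError("speed must be positive")
    return range_m / (speed_mph * MPH_TO_M_PER_S)


if __name__ == "__main__":
    for range_m in (110, 300):
        t = seconds_to_reach(range_m, 60)
        print(f"{range_m} m at 60 mph: about {t:.1f} s")
```

At 60 mph, 110 meters corresponds to roughly 4 seconds of travel, while 300 meters corresponds to roughly 11 seconds.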

The commercial applications for this next-generation LiDAR include autonomous vehicles. Though Tesla CEO Elon Musk has famously said self-driving cars don’t need LiDAR, a low-cost version would probably negate that argument.

The significantly reduced weight of the system would also make it far more useful and viable in drones. Robotics could also use this new system in many different areas, from warehouse automation to military robots. The smaller scale and lack of moving parts also present unique opportunities for medical imaging.

Mo Li and Bingzhao Li plan to form a startup company to commercialize their technology within two to three years. They’ve already received seed grants for this purpose from CoMotion, the startup incubator at UW, and the Washington Research Foundation.

“The fact that it only took two students to make this happen in about a nine-month period speaks to the beauty and the simplicity of the technology,” Li said. “At its core, this device is not too complicated. It is a straightforward implementation of a good idea, and it works.”

The paper, “Frequency-angular resolving LiDAR using chip-scale acousto-optic beam steering,” was published in the journal Nature on June 28. The research is supported by the Convergence Accelerator Program of the National Science Foundation and the Microsystems Technology Office of DARPA.
