We’re on the ground at CES 2026 this week, and there are plenty of reasons to get hyped on and off the show floor. And you don’t need to make the trek out to Las Vegas to get your hands on our latest AI glasses news.

New Features Coming to Display

With the launch of Meta Ray-Ban Display and Meta Neural Band last fall, we shipped our most advanced AI glasses yet. And with today’s updates, we’re making the experience of owning a pair more useful, seamless, and future-forward than ever before. From real-time messaging at your fingertips while your phone stays tucked away to your own personal teleprompter and beyond, AI glasses are more than an accessory — they’re a unique blend of fashion and technology that fades into the background as it makes life a little better.

So! Without further ado, let’s dig into today’s news — including an update on international availability.

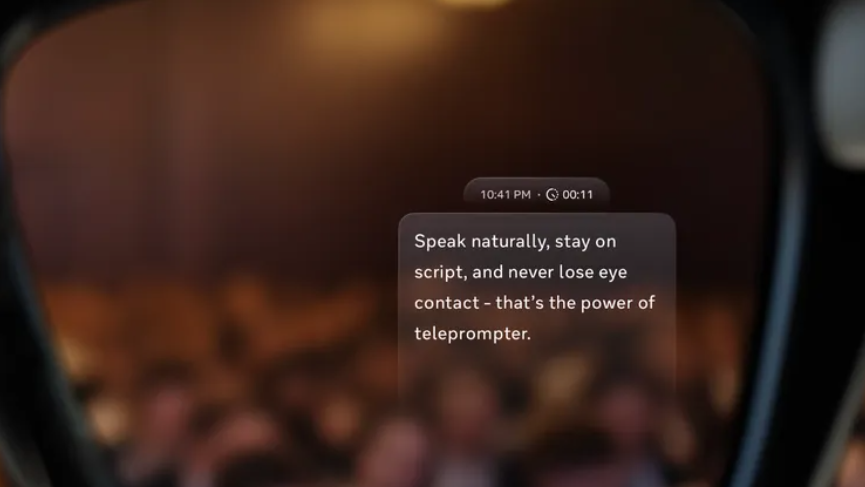

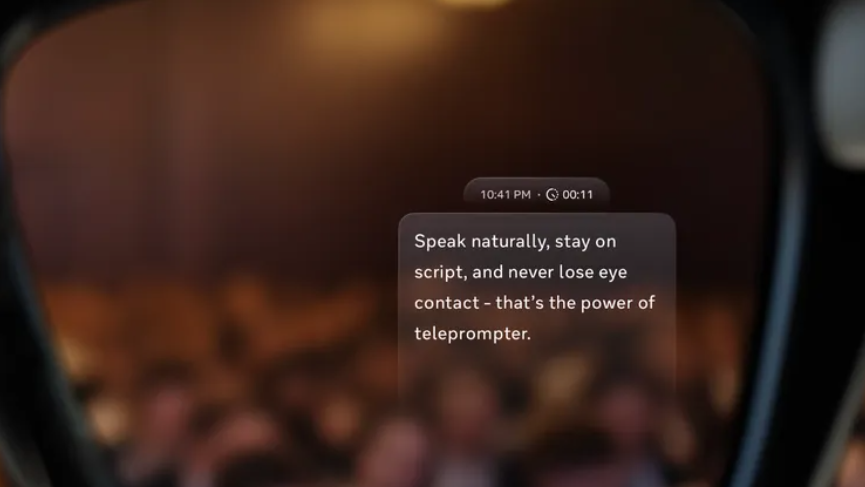

Teleprompter: Up Your Delivery Game

Whether you’re presenting in front of a live audience or recording hands-free content for the ’gram, even the best of us can fumble for the right words at the right time. But what if you could refer to your notes or even a full script without ever looking away?

With Meta Ray-Ban Display, that future vision is about to become a reality. This week, we’ll start a phased rollout of a new teleprompter feature that gives you a portable, flexible, and always-with-you way to deliver prepared remarks in personal and professional settings.

The discreet teleprompter is seamlessly embedded inside your display glasses, with customizable text-based cards and simple navigation with the Meta Neural Band. You can move through your presentation at your own speed, with the confidence of knowing your notes are literally right in front of you.

To use teleprompter on Meta Ray-Ban Display, simply copy and paste your notes from anywhere on your phone, whether that’s a notes app, Google Docs, or Meta AI.

From delivering mainstage keynotes to facilitating intimate fireside chats or recording your next Reel, teleprompter puts your notes where you need them most: right in front of you, so you can focus on landing the big moment.

Handwriting: Messaging While Keeping Your Head Up

Starting today, we’re rolling out a new way to message, giving owners of Meta Ray-Ban Display glasses and Meta Neural Band in our Early Access program the ability to send messages on WhatsApp and Messenger by writing with their finger on any surface. For now, availability is limited to the US and only English is supported.

You can easily jot down messages using only your finger on any surface while wearing Meta Neural Band and have those movements transcribed into digital messages on the fly, or choose from suggested responses based on your conversation. And all of this can be done with your hand resting comfortably at your side or on a tabletop.

Think about it: Now you can shoot off a quick message without fumbling with your phone, and you can keep your head up and eyes focused on the moment you’re in. Meta Neural Band is the only wrist device that enables handwriting on any surface. This is cutting-edge technology for the seamless control of your AI glasses — and a veritable glimpse into the future of communication.

Be among the first to try it out, and give us feedback on the early experience. Click here to learn more about our Early Access program and to sign up for the waitlist.

Pedestrian Navigation: Rolling Out to 4 New Cities

Getting around town on foot just got a little easier. Our pedestrian navigation feature is now available in a total of 32 cities: the 28 cities we launched Meta Ray-Ban Display with, plus Denver, Las Vegas, Portland, and Salt Lake City. More cities are coming soon, so keep an eye on the blog for all the latest!

Meta Ray-Ban Display: International Update

Meta Ray-Ban Display is a first-of-its-kind product with extremely limited inventory. Since launching last fall, we’ve seen an overwhelming amount of interest, and as a result, product waitlists now extend well into 2026.

Because of this unprecedented demand and limited inventory, we’ve decided to pause our planned international expansion to the UK, France, Italy, and Canada, which was originally scheduled for early 2026. We’ll continue to focus on fulfilling orders in the US while we re-evaluate our approach to international availability.

Garmin Unified Cabin: A Proof of Concept for Next-Generation EMG Automotive Input

Together with Garmin, we just announced a new automotive OEM proof of concept that connects Meta Neural Band with Garmin’s Unified Cabin suite of in-vehicle technology solutions for infotainment. In the demo, passengers can play games and navigate the Unified Cabin UI, scrolling to select an app and pinching to launch it. This is made possible by the wristband’s surface EMG technology, which senses a variety of gestures made using the thumb, index, and middle fingers.

This early concept sets the stage for new interaction methods for displays and vehicle functions and a true lean-back entertainment experience for multiple passengers.

“Meta Neural Band and its EMG technology could be the best way to control any device,” says Meta VP of Wearables Alex Himel. “Once you start using the band regularly, you want it to control more than just your AI glasses. We’ve already developed prototype experiences for the band to control devices in your home, and it’s been great to team up with Garmin to showcase its potential in your car. We’re excited about all the possibilities EMG can unlock as a future input platform over time.”

Developers and companies interested in partnering with Meta on EMG technology should complete this form and briefly detail their specific EMG integration and potential use cases.

EMG Smart Home & TetraSki Control: New Research Collaboration With University of Utah

Last but not least, we’re excited to announce a new research collaboration with the University of Utah to evaluate consumer-grade wrist wearables for people with different levels of hand mobility, with the goal of making human-computer interactions more accessible.

Using Meta Neural Band, the project will evaluate how the wristband can serve as a control interface for people with different levels of hand mobility by measuring electrical signals from muscles at the wrist and translating them into digital signals. Together with the University of Utah, we’ll co-design custom gestures that can control devices such as smart speakers, blinds, locks, and thermostats.

For people with muscular dystrophy, ALS, and other conditions, everyday activities like raising window blinds can present a challenge. Motorized smart home devices can help do the work, but their interfaces must be accessible for people with a range of abilities. What might be intuitive to some people — say, turning their hand to control a smart thermostat — might be impossible for others. Meta Neural Band is sensitive enough to detect subtle muscle activity in the wrist — even for people who can’t move their hands. University of Utah researchers will put our advanced research algorithms for customizing gesture controls to the test and adapt the experience based on user feedback.

These gesture controls go beyond flipping light switches and dialing in thermostats. The researchers will also investigate controls for mobility devices, like the University of Utah’s TetraSki. Designed for people with a wide range of physical abilities, the TetraSki currently employs either a joystick or sip-and-puff mouth controls. The Meta Neural Band presents a new set of potential control options, with gestures for steering and changing the ski’s wedge angle.

“We’re going to be assessing the existing EMG wristband for individuals with muscular dystrophy, stroke, ALS, and other conditions, to see how well it works for them right out of the box,” says Jacob A. George, the Solzbacher-Chen Endowed Professor in the John and Marcia Price College of Engineering’s Department of Electrical & Computer Engineering and the Spencer Fox Eccles School of Medicine’s Department of Physical Medicine and Rehabilitation. “We’ll be working closely with the participants to ensure the technology is intuitive, effective, and inclusive to all. This co-design, between industry, academia, and end users, is what makes this project so unique.”

This initial project is one of a series of academic collaborations we’re leading as part of our EMG accessibility portfolio. Beyond this project, our broader research agreement with the University of Utah opens the door for future collaborations in robotics and AI.
